Business Intelligence is nothing but gathering useful insights of the business from the tons of data all organizations have. Again this information gathering must not take a lot of time, nor require very sophisticated deductions to make sense of it.

Legacy BI systems have always been plagued with problems like Fragmented, Inconsistent Data. The analysis was very Report-centric and hence restricted. This led to lots of data that was pretty Non-Intuitive to a new user.

Oracle today is a market leader in the BI space as it has addressed the basic needs for BI reporting that has been lacking all these years. The basic pillars to contribute to an insight driven enterprise has been.

· Empower Everyone – Every person is provided with relevant, complete information tailored to their role.

· Provide Real-time Intelligence – Deliver insight that predicts the best next step, and deliver it in time to influence the business outcome

· Use Insight to Guide Actions – Lead people to take action based on facts to optimize decisions, actions and customer interactions

These are the pillars of a BI enabled enterprise will drive the next level of value creation and competitive advantage for organizations.

Generally any Business Intelligence discovery session starts off with an excel sheet. The impact on that opportunity stands greatly enhanced, if the sales consultant is able to immediately demonstrate the capabilities of the very powerful (yet simple) features of the product.

A BI deduction derived from the OBIEE reports definitely adds the awe factor to the product. During the demonstration if you hear lines like “I didn’t know that about my department!!” consider your selling done.

This document will help you doing just that. The power to take the customers sample excel, designing a OBIEE solution based on that excel and to gain BI insights on the data , creates a greater impact rather than running through a 100 slides listing the capabilities of the product. Remember, generally it may be the very first time the customer is seeing a BI solution and “Simplicity” is probably the feature that needs to be demonstrated. At this point the customer is probably not interested in knowing jargon like disconnected analytics, Query Federation or the very powerful caching capabilities of the product. These feature are definitely powerful solutions built in that needs to be told, but after the customer has got hooked to the idea of a BI solution.

In this document I have taken a simple skills bank survey excel from a fictitious organization and used OBIEE to design requests based on the data. Features like Common Enterprise Information Model, Guided Analytics, and Dash boarding Capabilities etc are some of the features that have been highlighted in this simulated solution.

We start off with a simple excel sheet on our journey of BI discovery.

The excel sheet has the following data columns.

| |

| |

| |

| |

| |

| - Department Id Description

|

| |

| |

- Years In Current Job Range

| |

| |

| |

| |

At this point, the Data-Warehousing consultant in you may be calling out to design Facts and Dimensions. Remind yourself that the customer is new to BI itself and does not understand too much about Data warehousing and star schemas. However in an ideal implementation case, this is the point you will design your data warehouse and out in place various ETL techniques to populate your warehouse that will form a source to your OBIEE.

You may directly use the excel sheet as a source to OBIEE using ODBC. However in this example I have sourced the data into an Oracle Database Table using Oracle Data Integrator (ODI).

I have done this primarily for 2 reasons

- An Oracle Database gives me greater flexibility to remodel this solution at a later point in time if required.

- I am introducing the customer to an ETL tool that usually is incorporated when dealing with large enterprise wide BI solutions.

Next we need to put in place our Metadata that will form the source to the Answers layer. We first have to import the table we have just created in our backend. This step assumes you have already created a ODBC connection to the respective Oracle schema.

Point out to the customer the option to import a Multidimensional Data Source. Again reminding yourself about the customer being new to Data warehousing, explain in brief about the need for multidimensional view of a data ( eg, No . Of Units sold viewed against a combination of Region\Time\Customer Profile\Product Profile\ Customer Satisfaction etc ). This may get the ball rolling for up-selling Oracle Essbase at a later point in time.

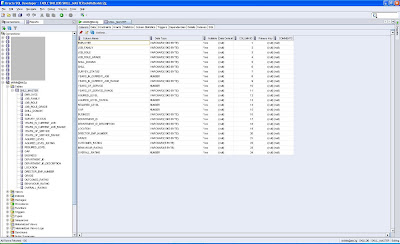

Point out the three separate layers in the Oracle BI Server Admin Tool you have just opened - Physical, Business Model & Presentation.

The Physical layer is an exact depiction of the target physical database(s). The Oracle BI EE platform is 100% data agnostic and has no preconceived notion of how the data is modeled. The Key Point here is that the Analytics platform should not dictate the data design. Some BI tools require the data to be modeled in a particular way, such as a star or snowflake schema. The Oracle BI EE tool maps in the data the exact same way in which the customer designed that data to support their business processes. No data is moved. Oracle BI EE simply references the way the data looks from the database system catalog.

Next we move to defining the Business Model in the BMM portion of the BI Admin tool.

· It provides a simplified “business view” of information available to all BI tools and all users in the organization regardless of physical data locations or structures. In this way, you can almost think of the business model as a sort of firewall between the users of the information and all the mechanics of the physical data access layer.

· Data is separate from the model. So let’s say that you have data on inventory in a certain operational data store that is mapped in, and then someone decides that system is going to be retired, so you have to map to a different source to get that inventory data. You can simply remap to the new schema, and it won’t impact all the end user reports because there is a separation or abstraction layer between the physical data layer and the logical business model layer. This allows a high degree of flexibility for IT to make changes to data structure without breaking thousands of end user reports, dashboards, etc.

· It provides a place where business metrics and calculations are defined, enabling more consistency and alignment in the organization. For example, let’s say the organization has a particular way in which they define customer value, or product profitability. These calculations and metrics can be defined in the metadata and made available to users – define once, deploy everywhere.

We can create a logical table in the BMM layer and drag the required columns from the Physical layer we had previously imported. From the analysis of excel there are 3 distinct areas of information

- The Skill Details

- The Job details

- The rating for an employee against each skill. Apart from rating other employee specific information like region, job grade etc also form a part of this logical table

We will hence create 3 different logical tables for each of the above logical groupings

We need to now relate these 3 different logical sources. The BI Admin tool provides you with a GUI which enables you to create these relations with ease.

There is a concept of something called “Complex Join” when you join 2 sources. To put it simply this type of join paves the way for the BI server to intelligently relate 2 sources that may come from very different technologies. This is the way to establish joins in cases like ours where we are using a flat Excel sheet.

Next we need to define the keys that will differentiate the rows in the supporting logical tables namely Jobs and Skills.

On referring back to the excel sheet, in jobs the combination of JOB_ROLE and JOB_FAMILY form a unique combination. Similarly for Skills the SKILL column has unique values.

The Business Model is also where the administrator creates the default Drill-Paths or Hierarchies. In our excel sheet there are 2 distinct hierarchies of information

- Skill Domain Drills down to Skill

- Job Family Drills down to Job Role

E.g. If I click on Consulting Service Delivery, should drill down to the following:

· Executive Director

· Senior Director

· Director

· IC4

These hierarchies are called “Dimensions” in OBI Tool. We can create them by simply right clicking the corresponding support logical table and selecting the “Create Dimension” option.

The resultant dimension is not complete. As JOB_FAMILY forms a parent for the JOB_ROLE, you need to drag that field to the higher level.

You may follow the same steps for defining the Skills Hierarchy as well. Below is the drill down effect we are trying to achieve in the front end.

Finally we can now populate the Presentation layer of the tool. The Presentation layer is a second layer of abstraction that sit-on-top of Business Model. You will simply drag-and-drop the logical applications you have just created from the Business Model, into the Presentation layer. The Presentation layer is presented to end-users in a complete WYSIWYG framework.

The goal is to make the navigation of the Subject Area as easy as possible for the end-users to enable self-service analyses. The Presentation layer is completely masked from changes in the physical data. Therefore there is no need to re-build reports each and every time the physical data changes. Put this in context when you have a couple thousand reports within an application. The amount of re-work is mind-boggling.

Now we have almost completed (note “almost”) completed the metadata setup. All this is saved in a file stored in the File system with an extension of .rpd. This file is loaded by the BI Server when it starts up. This is the core of all the magic we are about to see via the front end.

The end user usually accesses the presentation service. The entire BI solution is light weight and is accessed via a browser. This will the very first screen they will be prompted with.

This will be the username\password of the .rpd we had created earlier. The default user name is “Administrator” with the password as blank.

After successful log-in the user is looking at the “Answers” module of OBI. This is the section where As-Hoc queries are designed and executed. The first step is the selection of a “Subject Area “. This is nothing but the parent folders we had defined in our Presentation Layer in the previous steps. In our case we just have one called “SkillDB”. If the solution was more complex we could have chosen to distribute the sources from the BMM (Business Model and Mapping Layer) into different Subject Areas, just to ease manageability.

After this selection we get to see the exact replica of different columns we defined in our Presentation layer.

We see all the columns are in Capital case.. not so pleasing to the eye. Remember I told you before “almost” completed with the .rpd setup. Now there are some neat tools within our BI Admin tool that can be used to do neat little tasks like this. We may have chosen to manually rename each of the columns, buy why do work when there is automation. The Rename Wizard is available under ToolsàUtilities.

You will see in the above figure all the elements are marked in red. This is because I have opened the .rpd in “online mode” and then checked them out before I make the changes.

After the rename process is done you will need to check-in the changes and refresh the changes in the presentation services before you see the changes. The “Refresh Display” and “Reload Server Metadata” options are available below the Presentation layer Table listing in the Answers page.

Now let’s get to some serious business of creating Ad-hoc reports and gaining insights into the excel data. I want to find out which of the employees are not performing so well. Add the following columns in the Criteria

· Employee, Gap from Employee Facts

· Skill Domain and Skill from Skills

Then click on the Pivot table option

All the columns in your criteria are grouped under rows by default. Using the simple Drag and Drop interface, distribute the columns as in the below figure

If you do not see the gap values, change the “Aggregation Rule” to Sum in the Measures section.

What we have ended up with is with a pivot table with the knowledge gap data. Though this is useful, it is not as intuitive as is expected of a BI tool.

We will now put in traffic lights as applicable to make the data more interpretable. We shall add conditional formatting to the GAP column. We will show a red, yellow and green traffic light based on the gap values of <0, 0 and >0 respectively. The steps of which are shown below.

Lo and behold below is the very user friendly report that is the result of the conditional formatting we placed above.

Some conclusions that can be deduced from the results are :

- Emp0000002 has exceptional Leadership qualities. Probably he\she must be encouraged to get into a managerial stream.

- Emp0000001 is lagging behind in most of the skills. Ideal to send this employee to trainings to fill in, the knowledge gaps. We can extend this report by adding conditional guided navigations that will guide the end user to an internal training enrolment page perhaps.

This report will go away as soon as we close the browser. We don’t want to lose all our hard work do we? So we go ahead and save this report (or requests in OBIEE terms). Before we can do so there is one small step we need to carry out. These request first needs to be added onto something called the “Compound View”. This is the view that enables the request to be published to the outside world. Multiple such requests may be added to the compound view. All requests can be of different types as well e.g. Graphs, Pivot Table, Simple Tabular view, Narrative, Simple Text etc

Steps to add to the compound view and saving the request are demonstrated below.

I have chosen to delete the tabular view that is added by default and just retained the Pivot table before saving this request.

By now the customer is pretty awestruck by the products capabilities. Drive home the point by added these requests to dashboards and demonstrating the ease of putting together very complex enterprise wide dashboards in very little time. You may add things like the view selector, Dashboard Prompts, Drill down Graphs etc to demonstrate the sophistication of this tool.

Now is the time to talk to the customer of other advanced topics like Star Schemas, Caching capabilities, Aggregation setups, query federation, security models, Multi user development environments, BI Publisher, Disconnected Analytics, Oracle Delivers (e.g ibots), BI Office Add-In etc

To conclude these are the points that needs to be driven in about OBIEE at the end of this demo

- Simplifies BI tools infrastructure

- Integrates BI foundation with IT architecture

- Offers consistent view of information\single-source accountability

- Improves customer choice for BI solutions

.JPG)